Deployment of Edge-AI Infrastructure for Autonomous Vehicles

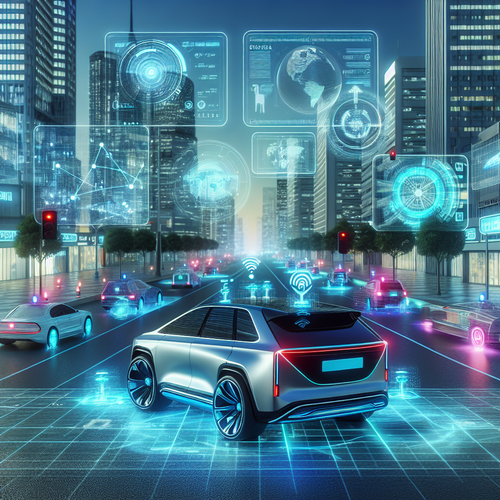

Autonomous vehicles rely heavily on artificial intelligence (AI) to make real-time decisions that enhance safety and efficiency. Because the sensors and cameras on these vehicles generate an ever-growing volume of data, deploying edge-AI infrastructure is becoming essential. This tutorial walks through the steps needed to deploy edge-AI infrastructure for autonomous vehicles.

Prerequisites

- Basic understanding of AI and machine learning concepts.

- Familiarity with cloud computing and edge computing.

- Knowledge of IoT systems and their integration.

Step-by-Step Guide

Step 1: Understand the Need for Edge-AI

The primary reason for deploying edge-AI infrastructure is to enable real-time data processing. Autonomous vehicles generate large volumes of sensor data, and much of it must be analyzed immediately to be useful for driving decisions. Processing this data at the edge, on the vehicle itself or at nearby edge servers, reduces latency, enables swift decision-making, and minimizes dependency on cloud services.
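To make the latency argument concrete, the short calculation below converts a decision delay into the distance a vehicle travels before it can react. It is a sketch: `distance_during_latency` is a hypothetical helper, and the 20 ms and 150 ms figures are illustrative assumptions, not benchmarks.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in metres a vehicle covers while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000.0   # ms -> s


# Illustrative latency budgets only; these are assumptions, not measurements.
scenarios = [("on-board inference", 20.0), ("cloud round trip", 150.0)]
for label, latency_ms in scenarios:
    metres = distance_during_latency(100.0, latency_ms)
    print(f"{label}: {metres:.2f} m travelled at 100 km/h")
```

At 100 km/h a vehicle covers about 27.8 m every second, so each additional 100 ms of latency adds roughly 2.8 m of travel before the system can respond.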

Step 2: Identify the Key Components

The core components of edge-AI infrastructure for autonomous vehicles include:

- Edge Devices: Sensors, cameras, and onboard computers that process data near the source.

- Connectivity: Reliable communication networks (such as 5G) that connect vehicles to local processing units.

- AI Models: Pre-trained models optimized for real-time inference.
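One way to keep these three components explicit is to describe a deployment as data and check it before rollout. The sketch below is hypothetical: the class names, fields, and the memory-fit rule are illustrative assumptions rather than any standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    name: str
    memory_mb: int
    accelerators: list[str] = field(default_factory=list)  # e.g. ["GPU"] or ["NPU"]


@dataclass
class Link:
    kind: str          # e.g. "5G" or "DSRC"
    latency_ms: float  # expected latency of this link


@dataclass
class Model:
    name: str
    size_mb: int
    target_device: str  # name of the EdgeDevice the model should run on


@dataclass
class EdgeDeployment:
    devices: list[EdgeDevice]
    links: list[Link]
    models: list[Model]

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means the plan looks consistent."""
        problems: list[str] = []
        devices_by_name = {d.name: d for d in self.devices}
        for model in self.models:
            device = devices_by_name.get(model.target_device)
            if device is None:
                problems.append(f"{model.name}: unknown device '{model.target_device}'")
            elif model.size_mb > device.memory_mb:
                problems.append(f"{model.name}: {model.size_mb} MB does not fit in {device.name}")
        if not self.links:
            problems.append("no connectivity configured")
        return problems


plan = EdgeDeployment(
    devices=[EdgeDevice("onboard-computer", memory_mb=8192, accelerators=["GPU"])],
    links=[Link("5G", latency_ms=10.0)],
    models=[
        Model("object-detector", size_mb=120, target_device="onboard-computer"),
        Model("lane-model", size_mb=40, target_device="front-camera"),
    ],
)
print(plan.validate())
```

Here the second model is flagged because no device named `front-camera` was declared, which is the kind of mismatch worth catching before anything is flashed to a vehicle.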

Step 3: Setting Up Edge Devices

Begin by selecting suitable edge devices capable of handling AI workloads. Consider the following:

- Processing Power: Devices should have enough processing capability (CPU, GPU, or specialized AI accelerators) to perform real-time analysis.

- Power Efficiency: Since these devices are embedded in vehicles, they must deliver the required performance within the vehicle's limited power budget.

- Robustness: Equipment must withstand automotive operating conditions such as vibration, temperature extremes, and moisture.
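The three criteria above can be turned into a simple shortlist filter. This is a minimal sketch under stated assumptions: `CandidateDevice` and `select_devices` are hypothetical, the boards and their figures are placeholders rather than real product specifications, and ranking by peak TOPS per watt is just one reasonable heuristic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateDevice:
    name: str
    ai_tops: float     # vendor-quoted peak AI throughput
    power_w: float     # typical module power draw
    min_temp_c: float  # rated operating temperature range
    max_temp_c: float


def select_devices(candidates, min_tops, power_budget_w, required_temp_c):
    """Keep devices meeting compute, power, and temperature limits, best TOPS-per-watt first."""
    low_c, high_c = required_temp_c
    suitable = [
        c for c in candidates
        if c.ai_tops >= min_tops
        and c.power_w <= power_budget_w
        and c.min_temp_c <= low_c
        and c.max_temp_c >= high_c
    ]
    return sorted(suitable, key=lambda c: c.ai_tops / c.power_w, reverse=True)


# Hypothetical boards; the numbers are placeholders, not real product specs.
candidates = [
    CandidateDevice("board-a", ai_tops=30.0, power_w=15.0, min_temp_c=-40.0, max_temp_c=85.0),
    CandidateDevice("board-b", ai_tops=100.0, power_w=60.0, min_temp_c=-25.0, max_temp_c=80.0),
    CandidateDevice("board-c", ai_tops=25.0, power_w=10.0, min_temp_c=-40.0, max_temp_c=105.0),
]
picked = select_devices(candidates, min_tops=20.0, power_budget_w=40.0, required_temp_c=(-40.0, 85.0))
print([d.name for d in picked])
```

Peak TOPS is only a rough proxy for real throughput, so a shortlist like this should be followed by benchmarking your actual models on the candidate hardware.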

Step 4: Communication Infrastructure

Implement robust communication systems to ensure seamless data transmission between the vehicle and edge servers. Key aspects include:

- Low-Latency Networks: Use 5G or Dedicated Short-Range Communications (DSRC) for low-latency, reliable links.

- Redundancy: Design redundant communication paths to enhance connectivity reliability in various scenarios.
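Redundant paths only help if the vehicle can switch between them. The sketch below picks the lowest-latency healthy link within a latency budget and returns `None` when no link qualifies, in which case the vehicle keeps relying on on-board processing. `LinkStatus`, `choose_link`, the health flags, and the 20 ms budget are hypothetical; in practice health and latency would come from your own heartbeat or probing mechanism.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LinkStatus:
    name: str
    healthy: bool       # e.g. derived from periodic heartbeats
    latency_ms: float   # most recent measured latency


def choose_link(links, max_latency_ms) -> Optional[LinkStatus]:
    """Pick the lowest-latency healthy link within budget; None means no usable link."""
    usable = [link for link in links if link.healthy and link.latency_ms <= max_latency_ms]
    return min(usable, key=lambda link: link.latency_ms, default=None)


links = [
    LinkStatus("5G", healthy=True, latency_ms=12.0),
    LinkStatus("DSRC", healthy=False, latency_ms=4.0),
    LinkStatus("LTE", healthy=True, latency_ms=45.0),
]
active = choose_link(links, max_latency_ms=20.0)
print(active.name if active else "no link: rely on on-board processing only")
```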

Step 5: Model Deployment

Deploy your AI models onto the edge devices. You may need to:

- Optimize models for lower latency and a smaller memory footprint, for example through quantization or pruning.

- Use deployment frameworks such as TensorFlow Lite, ONNX Runtime, or NVIDIA TensorRT.
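Quantization is one common way to shrink a model: storing weights as 8-bit integers instead of 32-bit floats cuts their memory by a factor of four. Toolchains such as TensorFlow Lite and TensorRT provide INT8 quantization for you; the pure-Python sketch below only illustrates the underlying affine mapping (a scale and a zero point) and is not how those frameworks implement it. Quantizing a single list per tensor is a simplifying assumption; production toolchains often quantize weights per channel and calibrate activations on representative data.

```python
def quantize_int8(weights):
    """Affine (asymmetric) per-tensor quantization of a non-empty list of floats to int8.

    Returns (quantized values, scale, zero_point) such that
    real_value ~= (q - zero_point) * scale.
    """
    w_min = min(min(weights), 0.0)  # the range must include 0.0 so zero is exactly representable
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # 256 int8 levels; guard the all-zero case
    zero_point = -128 - round(w_min / scale)  # maps w_min to approximately -128
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"zero_point={zero_point}", f"max_error={max_error:.5f}")
```

The reconstruction error stays within about one quantization step (`scale`), which is why accuracy should be re-validated after quantizing a model.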

Step 6: Testing and Validation

Once deployed, conduct rigorous testing under different driving conditions. Focus on:

- Validating the accuracy of real-time decisions made by the AI system.

- Ensuring the system’s reliability over extended periods.

- Monitoring performance and resource usage during operation.
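When monitoring performance, tail latency usually matters more than the average, because a single late decision can be unsafe. The sketch below summarizes per-frame latencies with nearest-rank percentiles and counts deadline misses. The sample values are synthetic, and the 33 ms deadline (roughly one frame interval at 30 frames per second) is an assumption; substitute your own timing budget.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty sample list; pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def latency_report(samples_ms, deadline_ms):
    """Summarize per-frame latencies and count frames that missed the deadline."""
    return {
        "p50_ms": percentile(samples_ms, 50),
        "p99_ms": percentile(samples_ms, 99),
        "max_ms": max(samples_ms),
        "deadline_misses": sum(1 for s in samples_ms if s > deadline_ms),
    }


# Synthetic per-frame inference latencies in ms (illustrative, not measured).
samples_ms = [18, 21, 19, 22, 20, 35, 19, 95, 21, 20]
print(latency_report(samples_ms, deadline_ms=33.0))
```

In this synthetic run the median looks healthy while the tail reveals two missed deadlines, which an average alone would hide.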

Troubleshooting Tips

If you encounter issues during deployment or testing:

- Check network connectivity and measure link latency.

- Ensure the edge device firmware and software are up to date.

- Analyze performance metrics to identify bottlenecks.
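To find where time goes, it helps to time each stage of the on-board pipeline separately. A minimal sketch follows; `StageProfiler` is a hypothetical helper, not part of any framework, and the stage names and `time.sleep` calls are placeholders for your own preprocessing and inference code. Note that wall-clock timing on the host will not capture asynchronous GPU work unless the stage waits for that work to finish.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageProfiler:
    """Accumulates wall-clock time per pipeline stage to locate bottlenecks."""

    def __init__(self):
        self.total_s = defaultdict(float)
        self.calls = defaultdict(int)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.total_s[name] += time.perf_counter() - start
            self.calls[name] += 1

    def mean_ms(self):
        """Mean milliseconds per call for each recorded stage."""
        return {name: 1000.0 * self.total_s[name] / self.calls[name] for name in self.total_s}

    def bottleneck(self):
        """Name of the stage with the highest mean time per call."""
        means = self.mean_ms()
        return max(means, key=means.get)


profiler = StageProfiler()
for _ in range(3):  # three simulated frames
    with profiler.stage("preprocess"):
        time.sleep(0.001)  # stand-in for image resizing/normalization
    with profiler.stage("inference"):
        time.sleep(0.02)   # stand-in for running the model
print(profiler.bottleneck(), profiler.mean_ms())
```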

Conclusion

Deploying edge-AI infrastructure for autonomous vehicles is crucial for enhancing their operational capabilities. By following the steps outlined above, you can help ensure that your autonomous systems make swift and reliable decisions in real time. Regular monitoring and updates will further improve system efficiency and safety.

For more on AI in transportation, consider checking out our guide on TinyML and AI at the Edge to gain insights into maximizing edge intelligence.