Introduction to Kafka for Event Streaming

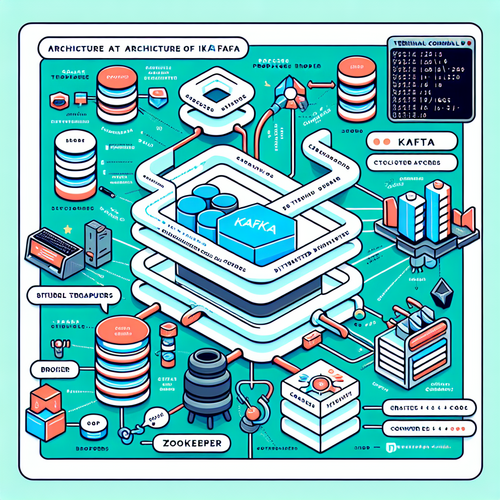

Apache Kafka is a widely adopted distributed event streaming platform used for building real-time data pipelines and streaming applications. It is known for its durability, scalability, and performance, making it suitable for processing high volumes of data. This tutorial will introduce you to Kafka and guide you through the steps to set it up and use it for event streaming.

1. Key Features of Kafka

- High Throughput: Kafka can handle large volumes of data with low latency.

- Durability: Messages are stored on disk, allowing recovery in case of failures.

- Scalability: Kafka can be run on clusters to handle increased workloads and parallel processing.

- Real-Time Processing: Supports real-time data streaming and processing.

2. Installing Kafka

To get started with Kafka, you first need Java (version 8 or later) installed. Check whether Java is installed with:

java -version

If Java is not installed, install it (on Debian or Ubuntu) with:

sudo apt install openjdk-11-jdk

Next, download Kafka from the official website:

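wget https://archive.apache.org/dist/kafka/2.8.0/kafka_2.12-2.8.0.tgz

(Older releases such as 2.8.0 are served from the Apache archive; only current releases remain on downloads.apache.org.)

Extract the downloaded file: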

tar -xzf kafka_2.12-2.8.0.tgz

Navigate to the Kafka directory:

cd kafka_2.12-2.8.0

3. Starting Kafka Server and ZooKeeper

In this release, Kafka relies on ZooKeeper to coordinate its brokers. From the Kafka directory, start ZooKeeper with the following command:

bin/zookeeper-server-start.sh config/zookeeper.properties

In another terminal, start the Kafka broker:

bin/kafka-server-start.sh config/server.properties

Leave both terminals open and check that neither process exits with an error.
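One way to check that both services are accepting connections is to probe their ports. The sketch below assumes the defaults in the shipped config files (ZooKeeper on port 2181, the broker on port 9092) and relies on bash's /dev/tcp feature. wait_for_port is a hypothetical helper name, not part of Kafka.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Hypothetical helper: retries a TCP connection to HOST:PORT about once per
# second and gives up after TIMEOUT_SECONDS failed attempts (default 30).
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30} attempts=0
  # The subshell opens the connection on fd 3; the fd closes when it exits.
  while ! (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    attempts=$((attempts + 1))
    if [ "$attempts" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
  done
  return 0
}

# Usage (assumed default ports):
#   wait_for_port localhost 2181 && echo "ZooKeeper is accepting connections"
#   wait_for_port localhost 9092 && echo "Kafka broker is accepting connections"
```

An open port only shows that the process is listening, so the startup logs in each terminal remain the more reliable signal.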

4. Creating a Topic

With Kafka running, you can create a topic to organize your messages. Run:

bin/kafka-topics.sh --create --topic my-topic --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

This creates a topic named my-topic with one partition and a replication factor of 1.
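When topic creation is part of a setup script, it can help to make it repeatable. The sketch below wraps the same CLI with --if-not-exists, so re-running it does not fail when the topic is already there, and adds a --describe call to inspect the result. The function names and the KAFKA_TOPICS_CMD and BOOTSTRAP_SERVER variables are illustrative assumptions, not part of Kafka.

```shell
# Path to the topics CLI and the broker address; both variable names are
# hypothetical and default to the values used in this tutorial.
KAFKA_TOPICS_CMD=${KAFKA_TOPICS_CMD:-bin/kafka-topics.sh}
BOOTSTRAP_SERVER=${BOOTSTRAP_SERVER:-localhost:9092}

# create_topic TOPIC [PARTITIONS] [REPLICATION_FACTOR]
# Creates the topic unless it already exists.
create_topic() {
  local topic=$1 partitions=${2:-1} replication=${3:-1}
  "$KAFKA_TOPICS_CMD" --create --if-not-exists \
    --topic "$topic" \
    --bootstrap-server "$BOOTSTRAP_SERVER" \
    --partitions "$partitions" \
    --replication-factor "$replication"
}

# describe_topic TOPIC
# Prints the topic's partition layout as reported by the broker.
describe_topic() {
  "$KAFKA_TOPICS_CMD" --describe --topic "$1" \
    --bootstrap-server "$BOOTSTRAP_SERVER"
}

# Usage (from the Kafka directory, with the broker running):
#   create_topic my-topic
#   describe_topic my-topic
```

The describe call should list the topic's partition count, its replication factor, and the leader of each partition.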

5. Sending Messages to the Topic

You can publish messages to your topic using the console producer:

bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic my-topic

Type your messages in the terminal and press Enter to send each one. Press Ctrl+C to exit the producer.
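The console producer reads from standard input, so messages can also be piped in rather than typed. The sketch below generates a few keyed test messages and is meant to be used with the producer's parse.key and key.separator properties. generate_events and the user-N:event-N message format are made up for illustration.

```shell
# generate_events COUNT [USERS]
# Hypothetical helper: prints COUNT lines of the form "user-<n>:event-<i>",
# cycling through USERS distinct keys (default 3).
generate_events() {
  local count=$1 users=${2:-3} i=1
  while [ "$i" -le "$count" ]; do
    printf 'user-%d:event-%d\n' $(( (i - 1) % users + 1 )) "$i"
    i=$((i + 1))
  done
}

# Usage (from the Kafka directory, with the broker running):
#   generate_events 10 | bin/kafka-console-producer.sh \
#     --bootstrap-server localhost:9092 --topic my-topic \
#     --property parse.key=true --property key.separator=:
```

With parse.key=true, the text before the separator becomes the message key and the rest becomes the value.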

6. Receiving Messages from the Topic

To read messages, open another terminal and run the console consumer command:

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic --from-beginning

This displays the messages sent to my-topic, starting from the earliest one still stored. Press Ctrl+C to stop the consumer.
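The consumer's output can be piped into ordinary shell tools as well. As a rough sketch, the helper below counts messages per key, assuming the consumer is run with print.key=true (which prints the key and value separated by a tab by default) and with --timeout-ms so that it exits once no new messages arrive. count_by_key is a hypothetical name.

```shell
# count_by_key
# Hypothetical helper: reads "key<TAB>value" lines on stdin and prints one
# "key count" line per distinct key, sorted by key.
count_by_key() {
  awk -F '\t' '
    { counts[$1]++ }
    END { for (k in counts) print k, counts[k] }
  ' | sort
}

# Usage (from the Kafka directory, with the broker running):
#   bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 \
#     --topic my-topic --from-beginning \
#     --property print.key=true --timeout-ms 5000 | count_by_key
```

Messages that were sent without a key are expected to be grouped under the key null.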

7. Monitoring and Managing Kafka

Use tools like Kafka Manager (now maintained as CMAK) or Confluent Control Center to monitor and manage your Kafka cluster.
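For a quick check without a separate tool, the kafka-consumer-groups.sh script that ships with Kafka can report how far a consumer group is behind. The sketch below sums the LAG column of its --describe output. total_lag is a hypothetical helper, and my-group is an assumed group name (the console consumer would need to be started with --group my-group for it to exist).

```shell
# total_lag
# Hypothetical helper: reads the table printed by
# "kafka-consumer-groups.sh --describe" on stdin and prints the summed lag.
total_lag() {
  awk '
    # Until the header row is found, look for the column labelled LAG.
    lag_col == 0 {
      for (i = 1; i <= NF; i++) if ($i == "LAG") lag_col = i
      next
    }
    # Data rows: add numeric lag values and skip placeholders such as "-".
    $lag_col ~ /^[0-9]+$/ { sum += $lag_col }
    END { print sum + 0 }
  '
}

# Usage (from the Kafka directory, with the broker running):
#   bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 \
#     --describe --group my-group | total_lag
```

A total that keeps growing suggests the consumers are not keeping up with the producers.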

8. Conclusion

By following this tutorial, you have set up a single-broker Apache Kafka installation, created a topic, and sent and received messages through it. Kafka is well suited to handling large volumes of real-time data in distributed systems and complex data architectures. Continue to explore Kafka’s capabilities, including stream processing with Kafka Streams and integration with other data tools.